|

Marginalia |

|

|

|

Atlantic City Method For Computing π |

|

Here's the slowest, most inefficient method known for computing π: Choose an arbitrary initial value x (real) and iterate the mapping x → (2−x)/(3−4x) for a total of M times. Count the number of iterates that fall within a small interval around zero. For example, let N denote the number of iterates that fall between −u/2 and +u/2 for some small u. Then the limiting value of the ratio uM/N is π (for sufficiently large M as u goes to zero). |

|

|

|

On Even Fibonacci Numbers |

|

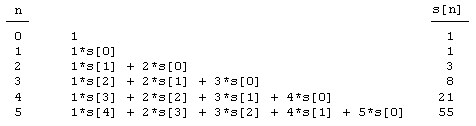

Beginning with the integer s[0] = 1, construct a sequence of integers such that the nth term s[n] is the sum of (k+1) s[n−k] for k=1 to n−1. Thus we have |

|

|

|

|

|

|

|

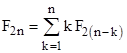

Interestingly, s[n] turns out to be just the even-ordered Fibonacci number F2n. (I've taken the liberty of setting F0 = 1 and F1 = 0, reversing the customary order of the initial values.) Thus we have the identity |

|

|

|

|

|

|

|

Logrithmic Integral and a Recurrence |

|

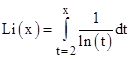

It's well known that the logrithmic integral, defined as |

|

|

|

|

|

|

|

gives a good approximation for the number of primes less than x. Also, we know that the average gap between consecutive primes near x is roughly ln(x). Therefore, the size of the Nth prime can be approximated by the Nth term in the sequence s[k] whose values beginning with s[0] = 2 are defined recursively by s[k] = s[k−1] + ln(s[k−1]) for k=1,2,... Consequently, the value of Li(s[n]) should be close to n. More precisely, it appears that Li(s[n]) ~ (n−1). What are the best error bounds on this approximate equality? |

|

|

|

Zero-Order Remainders |

|

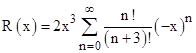

The series expansion of (1+x)2 can be written as |

|

|

|

|

|

|

|

where every term after the third has a zero in the numerator. Factoring the zero out of the remainder terms, we have (1+x)2 = (1 + 2x + x2) + (0) R(x) where |

|

|

|

|

|

|

|

This converges for |x| < 1, but what, if any, significance it has relative to (1+x)2, I don't know. A similar "order zero" remainder function can be associated with any finite expansion. |

|

|

|

Trisection On A Budget |

|

It's well known that there is no procedure using just straight-edge and compass for trisecting an arbitrary angle in a finite number of steps. However, we can certainly trisect an arbitrary angle to within any arbitrary precision by means of very simple straight-edge and compass operations. One approach is to simply bisect the angle, then bisect the left half-angle, then the right quarter-angle, then the left eighth-angle, and so on. The net result is 1/2 - 1/4 + 1/8 - 1/16 + 1/32 - ..., which differs from 1/3 by less than 1/2n after n bisections. This is not terribly elegant, but it's easy to remember and gives a construction with as much (finite) precision as desired. With 30 bisections the result would be within 1 part in 109 of an exact trisection. |

|

|

|

Inside a Tetrahedron |

|

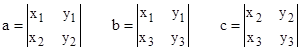

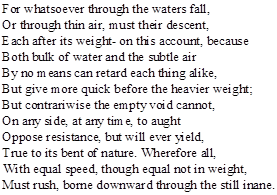

Given four points (0,0), (x1,y1), (x2,y2), and (x3,y3) in the plane, one of the points lies inside the triangle formed by the other three iff abc(a−b+c) < 0 where a,b,c are the three determinants |

|

|

|

|

|

|

|

In three dimensions, given five points (0,0,0), (x1,y1,z1), (x2,y2,z2), (x3,y3,z3), (x4,y4,z4) in space, is it possible to express a necessary and sufficient condition that any one of these points is inside the tetrahedron formed by the other three in terms of the four determinants |

|

|

|

|

|

|

|

with (i,j,k) = (1,2,3), (1,2,4), (1,3,4), and (2,3,4)? |

|

|

|

Congruences on 4th Order Recurrences |

|

Given a fourth order linear recurrence relation for which the resolvant cubic of the characteristic polynomial has a rational root, it's possible to derive explicit congruence conditions (mod p) for the p-th terms of the solution sequence. For example, consider the sequence of integers given by the recurrence formula sn = 2sn-1 – 4sn-3 – 2sn-4 with the arbitrarily chosen initial values s0 = s1 = s2 = s3 = 1. Notice that s31 equals 1107179136 which is divisible by 31. It can be shown that 31 is the only prime p for which sp is divisible by p. More generally, we can find all the primes p such that sp is congruent to some particular value, say 6000, modulo p. For this sequence it turns out that sp = 6000 (mod p) if and only if p is one of the five primes 29, 857, 1291, 36012017, or 58461031. Using the recurrence formula defined above, but with the new initial values s0 = 37, s1 = 19, s2 = 43, and s3 = 101, suppose we have an encryption system and the key is known to be the largest prime p such that sp = 5000 (mod p). How difficult would it be for someone to determine the key? |

|

|

|

Given Primes p,q, Find Pseudoprime pqr |

|

Suppose we're looking for a general way, given arbitrary primes p,q, to find a prime r such that pqr is a pseudoprime to one of a small set of bases. In principle this is just a finite search. Taking the base 2 as an example, we know 2r = 2 (mod r) for every prime r, so the condition that 2pqr = 2 (mod pqr) implies that 2pq = 2 (mod r). Therefore, r must be a divisor of 2(pq-1) - 1. For example, with p = 3 and q = 5 we need r to be a divisor of 214 - 1 = (3)(43)(127). Therefore, the only possible values of r that could make pqr a base-2 pseudoprime are 3, 43, and 127. It turns out that both 43 and 127 give base-2 pseudoprimes (645 and 1905). For another example, take p = 3 and q = 11. In this case r must be a divisor of 232 - 1 = (3)(5)(17)(257)(65537). It turns out that r = 257 gives the base-2 pseudoprime 8481. Of course, we can't really make use of this to test for the primality of n = pq by finding r, because finding the factors of 2(n-1) - 1 is more or less the same as knowing the factorization of n-1, and if we know that, we can already test for the primality of n using standard methods. |

|

|

|

A Conjecture on the Fermat Function |

|

For any positive integer n let F(n) denote the smallest absolute value of xn + yn + zn for non-zero integers x,y,z with distinct absolute values. The values of F(n) for n = 1 to 5 are 0, 14, 1, 98, and 12(?). Obviously for even values of n we have F(n) = 1 + 2n + 3n, which is not very interesting. For odd values of n the determination of F(n) seems non-trivial, considering that it is very difficult to say whether F(n) ever equals zero for any n>1. I'm not even sure that F(5) = 12, but certainly it is no greater than 12, in view of 135 + 165 + (−17)5. (Can anyone fill in some more values of F(n)?) We could also define F(n) as the smallest absolute value of xn + yn - zn where x,y,z are distinct positive integers. This gives an interesting function for even values of n as well. We might conjecture that |

|

|

|

|

|

|

|

for any distinct positive integers x,y,z and n > 5. This is stronger than Fermat’s original conjecture, where the right hand side was 0 for all n > 2. |

|

|

|

Closed Forms For the Logistic Map |

|

The logistic formula |

|

|

|

|

|

was first used by the Belgian sociologist and mathematician Pierre Francios Verhulst (1804-1849) to model the growth of populations with limited resources. More recently it has been studied extensively as an example of a simple formula exhibiting chaotic behavior. By means of trigonometric substitutions, it's not too difficult to determine closed form solutions for the logistic map for certain specific values of A. For example, with A = 4 we get |

|

|

|

|

|

|

|

With A = 2 the closed form solution is |

|

|

|

|

|

|

|

For A = -2 the nth iterate can be expressed as |

|

|

|

|

|

|

|

with the appropriate value of g. |

|

|

|

3→3 Mapping With No Crossed Lines |

|

Consider 6 points on a plane, labelled A, B, C and X, Y, Z, as shown below |

|

|

|

|

|

|

|

It is not possible to draw a line from each of A, B, C to each of X, Y, Z without any of the lines crossing. To prove this, note that such a configuration requires three parallel connections between A and B passing through the points X, Y, and Z. For any three non-crossing paths connecting two points, one of the paths must be contained entirely within the loop formed by the other two. Thus, without loss of generality we can assume that point Z is inside the loop AXBYA. Therefore the plane has been partitioned into three empty regions bounded by the loops AXBZA, AZBYA, and AXBYA. The point C must fall in exactly one of these three regions, which makes it inaccessible to Y, X, or Z respectively. This is actually a consequence of a theorem by Kuratowski which states that a graph is "planar" (i.e., contains no crossed lines) if and only if it contains no subgraph that is congruent with the completely connected graph K(5) or with the completely connected bi-graph K(3,3). The latter is the configuration of the given problem. |

|

|

|

On Random Chords |

|

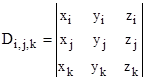

What is the probability that a randomly selected chord of a regular n-gon (n>3) is shorter than the side of the n-gon? This is a variation of a familiar class of problems, such as finding the probability that a "random chord" of a circle is longer than the radius, and as with all such problems it clearly depends on the assumed distribution of the available choices, which contains some ambiguity. One common definition of a "random chord" is to assume that the endpoints of the chord are uniformly distributed on the perimeter of the polygon. On this basis, there's a 1/n probability of the two ends of the chord falling on the same edge, and a 2/n probability of falling on adjacent edges, in which case the probability of the chord being shorter than an edge length is just the area in the first quadrant inside the ellipse x2 + 2cos(2π/n)xy + y2 = 1, giving an overall probability of |

|

|

|

|

|

|

|

Of course, other assumptions as to the distribution of "random chords" will give different answers. |

|

|

|

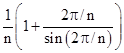

Lucretius on the Equivalence Principle |

|

Although Galileo (at the end of the 16th century) is often credited with being the first to recognize that all objects accelerate under the influence of gravity at the same rate, neglecting the drag effects of air or water, it seems clear that Lucretius was well aware of this fact in 50 BC. In De Rerum Natura he wrote |

|

|

|

|

|

|

|

The writings of Lucretius are believed to be primarily an exposition of the teachings of Epicurus (circa 300 BC), who in turn was influenced by Democritus centuries earlier, so the realization that all objects, regardless of weight, fall in vacuum at the same rate seems to be quite ancient. |

|

|

|

Computation and Physics |

|

A "computable number" is usually defined as a number for which there exists a finite machine with a finite program whose output converges on that number. However, from a physical standpoint, it’s questionable whether there is any such thing as a finite machine, because (for example) the range of gravitational interactions is infinite and cannot be shielded, so in principle there is no such thing as a perfectly isolated system. Further, even the local components of any physical machine can be represented by a state vector whose phase is always advancing (even if the state is stationary). Hence a “finite machine” is, at best, an idealization; no such thing actually exists. So, in order for the usual notions of computability to apply, we must restrict the class of “machines” to those that work repeatably. Notice that the usual definition of a computable number does not require the number to ever be completely computed, but merely that the output of the program converges on that number. For example, numbers such as the square root of 2 and π and e are considered computable, because we can specify finite and (putatively) repeatable programs that converge on these values. On this basis it is usually said that the "computable numbers" are countable, because each such number is uniquely implied by a finite machine with a finite program (written with a finite number of characters), and such machines and programs are obviously countable. However, the repeatability can never be demonstrated in such cases, since the process in question is infinite. We might just as well consider a “finite machine” consisting of a coin or a quantum mechanical device that prints out a string of binary digits 0.10011101... based on a sequence of coin tosses or quantum mechanical transitions. Admittedly, we can't conceptually extrapolate the value of the output of such a machine to infinity, so we can't talk about convergence in the usual way. All we ever have from a random process are the results already produced. The "ultimate" result of the process never "exists" in the same first-order Platonic sense that the ultimate result of a π-computing algorithm "exists" implicitly in the algorithm. For a random process, all numbers are equally implicit – at least within our understanding. One particular number only gets singled out on a finite basis as the successive digits are formed. On the other hand, we have no way of ruling out a deterministic “block universe”, so it may still be true that any particular machine running at any particular time and place may imply a definite infinite outcome, despite the fact that we don’t know how to predict it in advance. All knowledge (from a sufficiently robust point of view) is exclusive in nature, like the idea of a statue being “already there" in a raw block of stone, and all the sculptor does is remove the surrounding material. In an infinite block, is the countability of sculptures the same as the countability of material removals? |

|

|

|

One-One Mapping of Reals and Sequences |

|

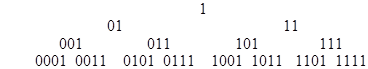

Some real numbers on the interval (0,1) possess two distinct binary representations. For example, the number 1/4 can be expressed as 0.0100000… or as 0.0011111… To establish a one-to-one mapping between the reals on the interval (0,1) and the set of semi-infinite binary sequences, we note that the two redundant sequences for a given real can be distinguished by their “most significant digit”. Thus we can map these two representations down to the next level. The sequences {x0111...} and {x1000...} are mapped to the distinct binary reals {.x01000...} and {.x11000...} respectively. The numerators on the first few levels are |

|

|

|

|

|

|

|

As we drop down to the left, we shift all but the right-most digit to the left and insert a “0” in the open space. As we drop down to the right, we shift all the digits to the left and insert a “1” in the right-most place. This is the same as applying 2x+1 to the right and 2x−1 to the left, remembering that the number of digits is increased by one at each level, adding zeros on the left if needed. Obviously each row consists of the odd integers less than 2n, each divided by 2n. We can get the same set of numbers (in a different order on each row) by simply applying 2x and 2x+1. Hence the binary digit reversals of the integers from 2n−1 to 2n – 1 are the odd integers from 1 to 2n – 1. |

|

|

|

This approach is particularly convenient for binary sequences because each "level" of terminating representations has twice as many members as the preceding level, so it's easy to define a mapping that absorbs the double representations. For other bases it's not quite as natural, but a similar method could be applied. In base B, the first level has B-1 members, and each successive level has B times the previous level. Therefore, it's necessary to carry down (2)(B-1) from the first to the second level, and (2+B)(B-1) from the second to the third level, and (2+B+B2)(B-1) from the third to the fourth level, and so on. At the (n+1)th level we need to carry (Bn + B - 2) sequences. This leaves just B-1 unmapped reals (from the first level), which shouldn't be too difficult to absorb. |

|

|

|

Maximizing A Symmetrical Function |

|

The June 1990 Issue of Mathematics Magazine presented several methods for determining the maximum value of the function |

|

|

|

|

|

|

|

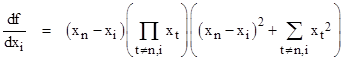

where x1 + x2 + ... + xn = 1 and each xj is non-negative. Most solutions were based on algebraic relations and induction arguments beginning with small values of n, but we can also approach this as an ordinary problem in the calculus of several variables. Using the slightly more general constraint x1 + x2 +... + xn = S we can eliminate xn and express f as a function of x1 through xj where j = n-1 as follows |

|

|

|

|

|

|

|

The function is zero on the boundary of the region with all xi greater than or equal to zero, and finitely positive on the interior, and a maximum occurs precisely when the partials of f with respect to each xi vanish, i.e., |

|

|

|

|

|

|

|

for k = 1, 2,…, n-1. Since the two right-hand factors are necessarily positive in the interior, this implies xk = xn for k = 1, 2, … , n-1. |

|

|

|

Finding Roots From Newton's Sums |

|

For any set of non-negative real numbers x1, x2,..., xn , suppose we are given the sums of the even powers of these numbers, i.e., we are given the values of |

|

|

|

|

|

|

|

for all positive even integers k. Can we determine the xi values as an explicit function of the ai values? Let yi = xi2 (i = 1,2,..,n) be the roots of the polynomial |

|

|

|

|

|

|

|

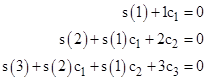

and let s(k) denote the sum of the kth powers of the roots. (Note that s(k) equals a2k.) Clearly s(0) = n. The other values of s(i) are related to the coefficients ci by Newton's Identities |

|

|

|

|

|

and so on. Thus, given s(1) through s(n) (which are equivalent to a2k for k = 1 to n), we can easily compute the coefficients ci. We can then solve this polynomial using any of the usual methods to determine the n roots yi, and the square roots of these values give the xi values, which were specified to be non-negative, so they are uniquely determined. |

|

|

|

Life in Stars |

|

How much complexity can exist inside a star? Presumably the temperatures are too high for the (long term) existence of complex chemical molecules, but what about different kinds of structures, perhaps involving persistent convection cells, or maybe even magnetic or electromagnetic activity? There must be some kind of "weather" inside the sun to account for things like "sunspots", solar flares, etc. Is it conceivable that the interior of a star could support structures with enough complexity to actually evolve and grow as distinct entities. (Even on Earth we give personalized names to hurricanes.) Could there be structures of a complexity equal to, say, the recursive patterns in cellular automata? Carrying this speculation to extremes, can we rule out the possibility of some form of consciousness evolving inside a star? Do the high temperatures make the requisite level of complexity impossible? If not, and considering how much of the matter in the universe is in the form of stars, is it possible that most conscious life in the universe is inside stars? Anything we would recognize as consciousness must have a means of interacting with things outside itself, and communication inside stars may be very difficult. Maybe neutrinos could provide a mechanism. |

|

|

|

Zeta Function and Harmonic Series |

|

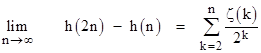

Recently someone asked about ways of computing Euler's constant, γ. This started me thinking about the partial sums of the harmonic series. Let h(n) denote the sum |

|

|

|

|

|

|

|

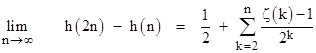

These sums are related to the zeta function by the formula |

|

|

|

|

|

|

|

Since ζ(k) approaches 1 as k increases, we can get faster convergence by re-writing this in the form |

|

|

|

|

|

|

|

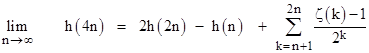

It seems that it should be possible to compute γ by making use of this relation. This could also be written as a recurrence |

|

|

|

|

|

|

|

Notice that, for example, ζ(40) = 1.00000000000090949478..., so the terms [ζ(k)-1]/2k quickly become very small as k increases. |

|

|

|

Smallest Maximum Denominator of a Unit Fraction Partition of 1 |

|

Page 161 of Guy's "Unsolved Problems in Number Theory" (2nd Ed) discusses expressing 1 as the sum of t distinct unit fractions. Letting m(t) denote the smallest possible maximum denominator in such a sum, the book notes that m(3) = 6 and m(4) = 12. These follow from the optimum 3-term and 4-term expressions |

|

|

|

|

|

|

|

However, the book goes on to say that m(12) = 120, whereas in fact m(12) is certainly no greater than 30. Here's a table of unit fraction expansions for t = 3 to 12: |

|

|

|

t denominators of unit fraction partitions of 1 |

|

--- ----------------------------------------------- |

|

3 2 3 6 |

|

4 2 4 6 12 |

|

5 2 4 10 12 15 |

|

6 3 4 6 10 12 15 |

|

7 3 4 9 10 12 15 18 |

|

8 3 5 9 10 12 15 18 20 |

|

9 4 5 8 9 10 15 18 20 24 |

|

10 5 6 8 9 10 12 15 18 20 24 |

|

11 5 6 8 9 10 15 18 20 21 24 28 |

|

12 6 7 8 9 10 14 15 18 20 24 28 30 |

|

|

|

Guy commented by email “This is indeed an error, and one which has been there since the first edition, 15 years ago. I must have miscopied or misunderstood it from Erdos or Graham. It's surprising that no-one has pointed it out earlier. I keep an updating file for UPINT and your message will be incorporated”. |

|

|

|

Intersecting Boats |

|

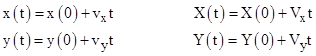

Suppose two boats A and B have known starting coordinates and speeds, but only boat A's heading is known. If the boats both travel in a straight line at constant speed, what heading will boat B need to take to intercept boat A? Also, at what location will boat A be intercepted? Let α denote the heading of boat A, such that α = 0 corresponds to a heading directly toward the initial location of boat B. Let β denote the heading of boat B on the same angular scale. Then β = invsin[ (v/V) sin(α) ] where v is the speed of A and V is the speed of B. In terms of xy coordinates, we can define parametric equations giving the coordinates x,y of A and X,Y of B as a function of time: |

|

|

|

|

|

|

|

We know the initial positions of both boats, and we know the components vx and vy of the velocity of A (because we know the heading and the speed of A). We don't know the individual components of B's velocity, but we know the magnitude V2 = Vx2 + Vy2. To find the point of intersection we set x(t) = X(t) and y(t) = Y(t), which gives |

|

|

|

|

|

|

|

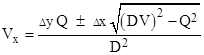

where Δy = y(0) - Y(0) and Δx = x(0) - X(0). If we define the quantities |

|

|

|

|

|

|

|

then the above equation can be solved to give |

|

|

|

|

|

|

|

and the similar equation for Vy. The previous equation then gives the time and coordinates of intersection. |

|

|

|

Testing for Prime Repunits |

|

Fermat's Little Theorem states that if m is a prime then bm = b (mod m) for every integer b. Therefore, if we can find an integer b for which bm is not congruent to b modulo m, we will have proven that m is composite. Take, for example, b = 2, and set m = (1071 - 1)/9. If m is a prime we would find that 2m = 2 (mod m). However, a little calculation shows that in fact 2m is congruent to |

|

|

|

8422611138482701447440679341973903379774416284229892522771978154015187 |

|

|

|

modulo m, so it follows that m is composite. It helps to have a multi-precision calculator or programming package to perform calculations of this kind. With UBASIC we can test for compositeness of base-10 repunits using the following little program. (Note that UBASIC uses the "@" symbol for "modulo".) |

|

|

|

10 INPUT "enter p"; p |

|

20 m = (10 ^ p - 1) \ 9 |

|

30 b = 2 : q = 1 |

|

40 FOR j = 1 TO p |

|

50 qt = q |

|

60 FOR k = 2 TO 10 |

|

70 q = (q * qt)@m |

|

80 NEXT k |

|

90 q = (q * b)@m |

|

100 PRINT j, q |

|

110 NEXT j |

|

|

|

The only values of p < 10000 that will give a final output of 2 are 2, 19, 23, 317, and 1031. This just means that they are "probably" primes, because composites that satisfy this test are extremely rare. However, it has been proven by other methods that these repunits are in fact primes. For a long time those five numbers were the only known prime repunits (in decimal), but in September 1999 Harvey Dubner found the next "probable prime" repunit with p = 49081. This most likely is a prime, but it would be prohibitive (by any known methods) to give a deterministic proof. |

|

|

|

Functions With No Zero Derivatives |

|

A number of simple transcendental functions, such as tan(x), ex, etc., have the property that their derivatives never vanish, no matter how many times we take the derivative, and the values of their derivatives at most rational arguments are also never zero. It's possible to express some well-known problems in terms of functions that have no zero derivatives. For example, consider the simple function |

|

|

|

|

|

|

|

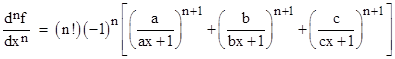

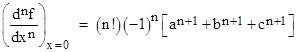

where a, b, and c are (non-zero) integers, positive or negative. The non-vanishing of every derivative of this function at x = 0 is easily seen to be equivalent to Fermat's Last Theorem, because the nth derivative is |

|

|

|

|

|

|

|

At x = 0 this reduces to |

|

|

|

|

|

|

|

which by Fermat’s Last Theorem cannot equal zero for any integers a,b,c. |

|

|

|

Minimum Difference Function |

|

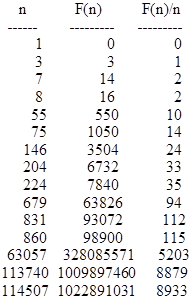

For any positive integer n let f(n) denote the minimum difference |a-b| for any two integers a,b such that ab = N. For example, f(n) = n-1 for any prime n, and f(n) = 0 if n is a square. Now we define F(n) as the sum of f(k) for k = 1, 2, .., n. How often is F(n) divisible by n? Here's a table of all the occurrences for n < 175000: |

|

|

|

|

|

|

|

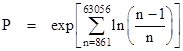

Presumably there are infinitely many such occurrences (the next two are with n = 660479 and n = 2329170), but what is their density? Is the gap between n = 860 and n = 63057 larger than would be expected? More generally, suppose f(n) is defined as a randomly selected integer in the range from 0 to n – 1, and define the cumulative function F(n) as the sum of f(k) for k = 1 to n. The probability that n divides F(n) is simply 1/n, because F(n) = F(n-1) + f(n), and since f(n) ranges from 0 to n-1, exactly one of those possible values makes F(n) divisible by n. Therefore, the probability that none of the values of n from 861 to 63056 divide the corresponding values of F(n) is given by |

|

|

|

|

|

|

|

An alternative to performing all the multiplications is to sum the logarithm of the product, as follows |

|

|

|

|

|

|

|

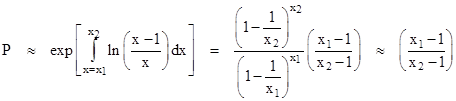

Converting the sum into an integral, this gives, for the probability of no solutions from n = x1 to n = x2, the expression |

|

|

|

|

|

|

|

where we’ve made use of the fact that (1 - 1/n)n approach 1/e for large values of n. Thus the probability of the empty span from 861 to 83056 (if the values of f(n) were randomly distributed) is (861-1)/(63056-1) = 0.014. |

|

|