
Pairwise and Complete Independence


Given a set of N true/false variables, each with a specified probability of being true, pairwise independence does not imply complete independence. To illustrate this, consider a system consisting of three components denoted by A, B, and C. Suppose there is a series of trials, each returning the states of these three components at the end of a sequence of missions. The eight possible joint states of the components are 000, 001, 010, 011, 100, 101, 110, and 111. For simplicity we stipulate that each component has probability 1/2 of being true (value = 1) on any given trial. This means that, in the long run, the fraction of trials in which a given component is true approaches 1/2.
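The "long-run fraction" reading of a probability can be illustrated with a small simulation. This is a minimal sketch: the function name `observed_fractions` and the seed are illustrative. For convenience only, it also assumes that the three components are drawn independently, which is exactly the kind of assumption the following paragraphs show cannot be inferred from the individual probabilities.

```python
import random

def observed_fractions(trials, p=0.5, seed=1):
    """Fraction of simulated trials in which each of A, B, C is true.

    Assumes (for this sketch only) that the three components are drawn
    independently, each true with probability p on every trial."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    counts = [0, 0, 0]
    for _ in range(trials):
        for i in range(3):
            if rng.random() < p:
                counts[i] += 1
    return [c / trials for c in counts]

# The observed fractions settle toward p = 1/2 as the number of trials grows.
for n in (100, 10_000, 200_000):
    print(n, observed_fractions(n))
```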


Since each of the three components has probability 1/2, one might naively think that the probability of all three being true would be (1/2)³ = 1/8. That does not follow, however, because the components may not be statistically independent. In the extreme case of dependence, with all three components always in the same state, each would still have probability 1/2, but the probability of all three being true would also be 1/2. To ensure that the probability of the intersection equals the product of the individual probabilities, we must be able to rule out this kind of dependency.
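The contrast between the two cases can be made concrete by computing exact probabilities from a joint distribution over the eight states. This is a minimal sketch using exact fractions. The helper names `prob` and `summarize` are illustrative, not from the source.

```python
from fractions import Fraction
from itertools import product

STATES = list(product((0, 1), repeat=3))  # joint states (a, b, c)

def prob(dist, event):
    """Total probability of the joint states that satisfy `event`."""
    return sum(p for state, p in dist.items() if event(state))

def summarize(dist):
    """Return ([P(A), P(B), P(C)], P(all three true))."""
    marginals = [prob(dist, lambda s, i=i: s[i] == 1) for i in range(3)]
    return marginals, prob(dist, lambda s: s == (1, 1, 1))

# Independent components: all eight joint states equally likely.
independent = {s: Fraction(1, 8) for s in STATES}

# Extreme dependence: the three components are always in the same state.
same_state = {s: Fraction(0) for s in STATES}
same_state[(0, 0, 0)] = same_state[(1, 1, 1)] = Fraction(1, 2)

for name, dist in (("independent", independent), ("same state", same_state)):
    marginals, all_true = summarize(dist)
    print(name, [str(m) for m in marginals], str(all_true))
```

Both cases report individual probabilities of 1/2. The probability of all three being true, however, is 1/8 in the first case and 1/2 in the second.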


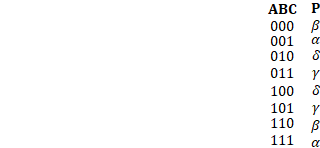

A necessary condition for this is that the components be pairwise independent, meaning that (for example) the probability of C given A is the same as the probability of C given not-A, and similarly for the other pairs. However, pairwise independence is not a sufficient condition for complete independence. The table below shows the eight possible joint states along with symbols denoting their probabilities.


Naturally, we have the normalizing condition α + β + γ + δ = 1/2 along with


Subtracting pairwise gives α = δ and β = γ. Moreover, these relations automatically ensure that each component has individual probability 1/2 and that the components are pairwise independent. For example, we have

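$$P(A) = P(100) + P(101) + P(110) + P(111) = 2(\alpha + \beta) = \tfrac{1}{2}$$

$$P(C \mid A) = \frac{P(101) + P(111)}{P(A)} = \frac{\alpha + \beta}{1/2} = \tfrac{1}{2}, \qquad P(C \mid \lnot A) = \frac{P(001) + P(011)}{P(\lnot A)} = \frac{\alpha + \beta}{1/2} = \tfrac{1}{2}$$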

However, this does not ensure complete independence. For example, we have

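$$P(C \mid A{=}B) = \frac{P(001) + P(111)}{P(000) + P(001) + P(110) + P(111)} = \frac{\alpha}{\alpha + \beta}$$

$$P(C \mid A{\ne}B) = \frac{P(011) + P(101)}{P(010) + P(011) + P(100) + P(101)} = \frac{\beta}{\alpha + \beta}$$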

These two quantities sum to 1, but they need not be equal. Recalling that α + β = 1/4, we have

$$P(C \mid A{=}B) = 4\alpha, \qquad P(C \mid A{\ne}B) = 4\beta = 1 - 4\alpha$$

If α = 1/8, then β = 1/8 and each of the eight joint states has probability 1/8. The probability of all three components being 1 (the 111 state) is then (1/2)³ = 1/8. However, if we set α = 1/6, then β = 1/12. In that case the probability of C given A = B is 2/3, whereas the probability of C given A ≠ B is 1/3. The probability of the 111 state is then 1/6, which exceeds the value of 1/8 it would have if the components were completely independent. At the extremes, we could have α = 1/4, β = 0 or α = 0, β = 1/4.
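The one-parameter family in the paragraph above can be checked by brute force. This is a minimal sketch. It assumes, as the numbers above imply once α = δ and β = γ, that each odd-parity state (001, 010, 100, 111) has probability α and each even-parity state (000, 011, 101, 110) has probability β = 1/4 − α. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

def parity_family(alpha):
    """Joint distribution over states (a, b, c), assuming odd-parity states
    (001, 010, 100, 111) each have probability alpha and even-parity states
    (000, 011, 101, 110) each have beta = 1/4 - alpha."""
    if not 0 <= alpha <= Fraction(1, 4):
        raise ValueError("alpha must lie in [0, 1/4]")
    beta = Fraction(1, 4) - alpha
    return {s: alpha if sum(s) % 2 else beta for s in product((0, 1), repeat=3)}

def prob(dist, event):
    """Total probability of the joint states that satisfy `event`."""
    return sum(p for s, p in dist.items() if event(s))

def pairwise_independent(dist):
    """P(X and Y) == P(X) * P(Y) for every pair of components."""
    for i, j in combinations(range(3), 2):
        p_i = prob(dist, lambda s: s[i] == 1)
        p_j = prob(dist, lambda s: s[j] == 1)
        p_ij = prob(dist, lambda s: s[i] == 1 and s[j] == 1)
        if p_ij != p_i * p_j:
            return False
    return True

def completely_independent(dist):
    """Every joint state's probability equals the product of the marginals."""
    marg = [prob(dist, lambda s, k=k: s[k] == 1) for k in range(3)]
    for s, p in dist.items():
        expected = Fraction(1)
        for k, bit in enumerate(s):
            expected *= marg[k] if bit else 1 - marg[k]
        if p != expected:
            return False
    return True

def p_c_given(dist, given):
    """P(C = 1 | given(a, b)), where `given` is a predicate on A and B."""
    den = prob(dist, lambda s: given(s[0], s[1]))
    num = prob(dist, lambda s: given(s[0], s[1]) and s[2] == 1)
    return num / den

for alpha in (Fraction(0), Fraction(1, 8), Fraction(1, 6), Fraction(1, 4)):
    d = parity_family(alpha)
    print(alpha, pairwise_independent(d), completely_independent(d),
          p_c_given(d, lambda a, b: a == b), p_c_given(d, lambda a, b: a != b),
          d[(1, 1, 1)])
```

Every value of α passes the pairwise check, but only α = 1/8 passes the complete-independence check. At α = 0 only the even-parity states occur, so C is always the exclusive-or of A and B, and yet no pair of components is dependent.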


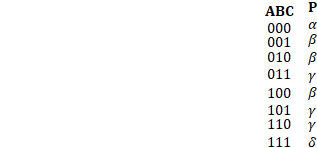

Another initial premise, ensuring symmetry among the three components, would be to assign probabilities to the states based on valency (the number of bits set), as shown below.

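| State(s) | Probability of each state |
|---|---|
| 000 | α |
| 001, 010, 100 | β |
| 011, 101, 110 | γ |
| 111 | δ |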

Here α designates the state with no bits set (no components failed), β each of the states with one bit set, γ each of the states with two bits set, and δ the state with all three bits set (all components failed). With these assignments, any permutation of the components A, B, C leaves the probabilities unchanged. We have

$$\alpha + 3\beta + 3\gamma + \delta = 1$$

$$P(A) = P(B) = P(C) = \beta + 2\gamma + \delta$$
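Enumerating the eight states confirms two facts about the valency assignment. The total probability is α + 3β + 3γ + δ, and each component is 1 with probability β + 2γ + δ (the quantity called q below). Relabelling the components also changes no state's probability. This is a minimal sketch. The numeric values are hypothetical, chosen only so that the total is 1, and they are not meant to satisfy pairwise independence.

```python
from fractions import Fraction
from itertools import permutations, product

def valency_dist(alpha, beta, gamma, delta):
    """Give each joint state (a, b, c) the probability for its number of 1 bits."""
    by_count = (alpha, beta, gamma, delta)
    return {s: by_count[sum(s)] for s in product((0, 1), repeat=3)}

def prob(dist, event):
    """Total probability of the joint states that satisfy `event`."""
    return sum(p for s, p in dist.items() if event(s))

# Hypothetical values chosen so that alpha + 3*beta + 3*gamma + delta = 1.
alpha, beta, gamma, delta = Fraction(1, 5), Fraction(1, 10), Fraction(1, 15), Fraction(3, 10)
dist = valency_dist(alpha, beta, gamma, delta)

total = sum(dist.values())
q = beta + 2 * gamma + delta
marginals = [prob(dist, lambda s, i=i: s[i] == 1) for i in range(3)]

# Permuting the bit positions (relabelling A, B, C) changes no probability.
symmetric = all(
    dist[tuple(s[k] for k in perm)] == p
    for s, p in dist.items()
    for perm in permutations(range(3))
)
print(total, q, [str(m) for m in marginals], symmetric)
```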

We also have the conditional probabilities

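$$P(A \mid B) = \frac{\gamma + \delta}{\beta + 2\gamma + \delta}, \qquad P(A \mid \lnot B) = \frac{\beta + \gamma}{\alpha + 2\beta + \gamma}$$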

and likewise for every other pair. Now, letting q denote β + 2γ + δ, and imposing the pairwise independence condition (meaning that P(A|B) = P(A), etc.), we have

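$$\frac{\gamma + \delta}{q} = q \quad\Rightarrow\quad \gamma + \delta = q^2$$

$$\frac{\beta + \gamma}{\alpha + 2\beta + \gamma} = \frac{\beta + \gamma}{1 - q} = q \quad\Rightarrow\quad \beta + \gamma = q(1 - q)$$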

Thus if we define ε = δ − q³, we have

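$$
\begin{aligned}
\alpha &= (1-q)^3 - \varepsilon \\
\beta &= q(1-q)^2 + \varepsilon \\
\gamma &= q^2(1-q) - \varepsilon \\
\delta &= q^3 + \varepsilon
\end{aligned}
$$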
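These expressions follow by direct substitution. Write δ = q³ + ε and use four facts: the independence condition γ + δ = q² (from P(A|B) = (γ + δ)/q = q), the definition q = β + 2γ + δ, and the normalization α + 3β + 3γ + δ = 1. Substituting gives each probability in turn:

$$
\begin{aligned}
\gamma &= q^2 - \delta = q^2 - q^3 - \varepsilon = q^2(1-q) - \varepsilon \\
\beta &= q - 2\gamma - \delta = q - 2q^2(1-q) + 2\varepsilon - q^3 - \varepsilon = q(1-q)^2 + \varepsilon \\
\alpha &= 1 - 3\beta - 3\gamma - \delta = 1 - 3q(1-q)^2 - 3q^2(1-q) - q^3 - \varepsilon = (1-q)^3 - \varepsilon
\end{aligned}
$$

The last step uses the binomial identity (1 − q)³ + 3q(1 − q)² + 3q²(1 − q) + q³ = ((1 − q) + q)³ = 1. As a consistency check, β + γ = q(1 − q)² + q²(1 − q) = q(1 − q), which is the condition P(A|¬B) = q.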

From the expression for γ we see that ε cannot be greater than q² − q³. The maximum possible value of δ (the probability of all three components being failed), given this symmetry and pairwise independence, is therefore q². This maximum is attainable only when q ≤ 1/2. The reason is that α = (1 − q)³ − ε must also be nonnegative, and (1 − q)³ ≥ q² − q³ exactly when q ≤ 1/2; this covers the usual case of small failure probabilities. By the same reasoning, for a system of n components under the conditions of symmetry and pairwise independence, the fully-failed state can have a probability up to qⁿ⁻¹, instead of the probability qⁿ for complete independence.
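The bound on δ can be checked by building the symmetric distribution from q and ε and testing it by brute-force enumeration. This is a minimal sketch; the helper names are hypothetical.

- `symmetric_pairwise_dist` uses α = (1 − q)³ − ε, β = q(1 − q)² + ε, γ = q²(1 − q) − ε, δ = q³ + ε.
- `eps_upper_bound` takes the largest ε that keeps both γ and α nonnegative.

```python
from fractions import Fraction
from itertools import combinations, product

def symmetric_pairwise_dist(q, eps):
    """Valency-based distribution over states (a, b, c) with each component
    failed (bit = 1) with probability q and P(111) = q**3 + eps."""
    alpha = (1 - q) ** 3 - eps      # no components failed
    beta = q * (1 - q) ** 2 + eps   # each state with one failure
    gamma = q ** 2 * (1 - q) - eps  # each state with two failures
    delta = q ** 3 + eps            # all three failed
    by_count = (alpha, beta, gamma, delta)
    if min(by_count) < 0:
        raise ValueError("eps out of range: a state probability is negative")
    return {s: by_count[sum(s)] for s in product((0, 1), repeat=3)}

def eps_upper_bound(q):
    """Largest eps keeping both gamma >= 0 and alpha >= 0."""
    return min(q ** 2 * (1 - q), (1 - q) ** 3)

def prob(dist, event):
    """Total probability of the joint states that satisfy `event`."""
    return sum(p for s, p in dist.items() if event(s))

def pairwise_independent(dist):
    """True if P(i and j failed) == P(i failed) * P(j failed) for every pair."""
    for i, j in combinations(range(3), 2):
        p_i = prob(dist, lambda s: s[i] == 1)
        p_j = prob(dist, lambda s: s[j] == 1)
        p_ij = prob(dist, lambda s: s[i] == 1 and s[j] == 1)
        if p_ij != p_i * p_j:
            return False
    return True

# Compare the largest attainable P(111) with q**2 for a few values of q.
for q in (Fraction(1, 5), Fraction(1, 2), Fraction(3, 4)):
    dist = symmetric_pairwise_dist(q, eps_upper_bound(q))
    print(q, pairwise_independent(dist), dist[(1, 1, 1)], q ** 2)
```

For q = 1/5 and q = 1/2 the largest attainable P(111) equals q². For q = 3/4 the constraint α ≥ 0 binds first and the maximum is 7/16 rather than 9/16. At q = 1/2 the extreme case puts probability 1/4 on each of 001, 010, 100, and 111, which is the α = 1/4 extreme of the earlier example.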
